Mastering Infrastructure as a Service with AWS

“The cloud is not about how you build infrastructure. It’s about how you use the flexibility it provides.”

Let’s face it – in today’s fast-paced digital world, cloud computing has evolved from just another tech buzzword into the very foundation of modern IT infrastructure. Look beyond the marketing hype, and you’ll discover a truly powerful concept that’s completely changing how businesses operate and scale their technology.

The Power of Cloud Computing

Think about it – cloud computing gives you on-demand access to computing resources whenever you need them. Need applications? Data centers? It’s all available over the internet, and you only pay for what you use. Remember the days of massive upfront server investments? That’s history! This clever shift from capital expenditure to operational expenditure has democratized access to enterprise-grade technology, making it accessible to businesses of all sizes. Even small startups can now leverage the same advanced infrastructure that was once the exclusive domain of tech giants.

Understanding IaaS: The Foundation of Cloud Architecture

You’ve probably heard about the “cloud service models” – (SaaS, PaaS, and IaaS). While they’re all important, let’s focus on Infrastructure as a Service (IaaS) – it’s truly the most fundamental layer of them all. Think of IaaS as providing the essential building blocks for your cloud IT – the digital equivalent of the concrete, steel, and electrical systems of a physical building:

| Resource Type | What You Get | Traditional Equivalent |

|---|---|---|

| Compute | Virtual machines with configurable CPU and RAM | Physical servers in a data center |

| Storage | Block, object, and file storage options | SANs, NAS devices, and disk arrays |

| Networking | Virtual networks, subnets, IP addresses, load balancers | Routers, switches, firewalls, and load balancers |

| Security | Identity management, access control, encryption | Physical security, firewalls, and network policies |

Here’s what makes IaaS so powerful: it offers you the highest level of flexibility and management control over your IT resources. Instead of dropping a fortune on hardware that might be obsolete next year, you can rent exactly what you need, precisely when you need it. Need more power during holiday shopping season? Scale up. Slower summer months? Scale down. All this flexibility while still maintaining complete control over how everything is configured and managed. It’s your infrastructure, just without the headaches of physical ownership.

AWS: The Pioneer of Cloud IaaS

Let’s talk about the elephant in the room when it comes to IaaS providers – Amazon Web Services (AWS). AWS isn’t just another player; they’re the pioneer who blazed the trail and remain the industry leader today. When AWS launched back in 2006, they didn’t just enter the market – they fundamentally changed the entire game of how businesses approach IT infrastructure. Look at what they bring to the table:

- An incredible lineup of over 200 fully featured services you can access from practically anywhere on Earth

- A massive global footprint spanning 27 geographic regions with 87 availability zones – meaning your applications can be closer to your users

- Rock-solid security capabilities and compliance certifications that let you sleep at night

- Unmatched scale and reliability whether you’re a scrappy startup or a Fortune 500 giant

Ready to dive in? Throughout this comprehensive guide, I’ll show you how to harness AWS’s powerful IaaS offerings to build cloud solutions that are robust, scalable, and cost-effective – the kind that can truly transform what your infrastructure can do.

What We’ll Cover

| Section | Key Learning |

|---|---|

| Deploying Web Applications | Building scalable infrastructure with EC2, ALB, and ASG |

| Path-Based Routing | Directing traffic to different services using ALB rules |

| Advanced Networking | Implementing private/public subnets with secure bastion access |

| Domain Configuration | Setting up end-to-end routing for multi-port applications with Route 53 |

| Cross-Account VPC Peering | Establishing secure connectivity between AWS accounts |

| EBS & AMI Management | Creating snapshots and images for data persistence |

| IAM Policy Creation | Implementing fine-grained access control for EC2 |

| S3 Bucket Operations | Managing storage using AWS CLI |

Deploying a Web Application on AWS

What You’ll Learn

- Setting up EC2 instances and installing web server software

- Creating an Application Load Balancer for traffic distribution

- Configuring Auto Scaling Groups to automatically adjust capacity

- Testing and verifying scaling behavior under load

In this section, we’re going to roll up our sleeves and build something special – a truly resilient and scalable web application infrastructure on AWS. We’ll bring together multiple IaaS components like pieces of a puzzle to create a robust architecture that doesn’t just handle traffic but automatically adjusts to demands. No more late-night scrambles when your site hits the front page of Reddit!

flowchart TD

User([User]) -->|HTTP Request| ALB[Application Load Balancer]

ALB -->|Traffic Distribution| ASG{Auto Scaling Group}

ASG -->|Scales Out| EC2_1[EC2 Instance 1]

ASG -->|Scales Out| EC2_2[EC2 Instance 2]

ASG -->|Scales Out| EC2_N[EC2 Instance N]

CloudWatch[CloudWatch Metrics] -->|Triggers| ASG

EC2_1 -->|Reports Metrics| CloudWatch

EC2_2 -->|Reports Metrics| CloudWatch

EC2_N -->|Reports Metrics| CloudWatch

classDef aws fill:#FF9900,stroke:#232F3E,color:white

class User,ALB,ASG,EC2_1,EC2_2,EC2_N,CloudWatch aws

Key Concepts

| Concept | Description |

|---|---|

| EC2 (Elastic Compute Cloud) | Virtual servers in the cloud that provide resizable compute capacity |

| ALB (Application Load Balancer) | Distributes incoming application traffic across multiple targets |

| ASG (Auto Scaling Group) | Maintains application availability by automatically adding or removing EC2 instances |

| AMI (Amazon Machine Image) | Pre-configured templates used for EC2 instance creation |

| Launch Template | Contains configuration information to launch instances in an ASG |

Task 1: Deploy Web Application on EC2 Instances

Steps:

-

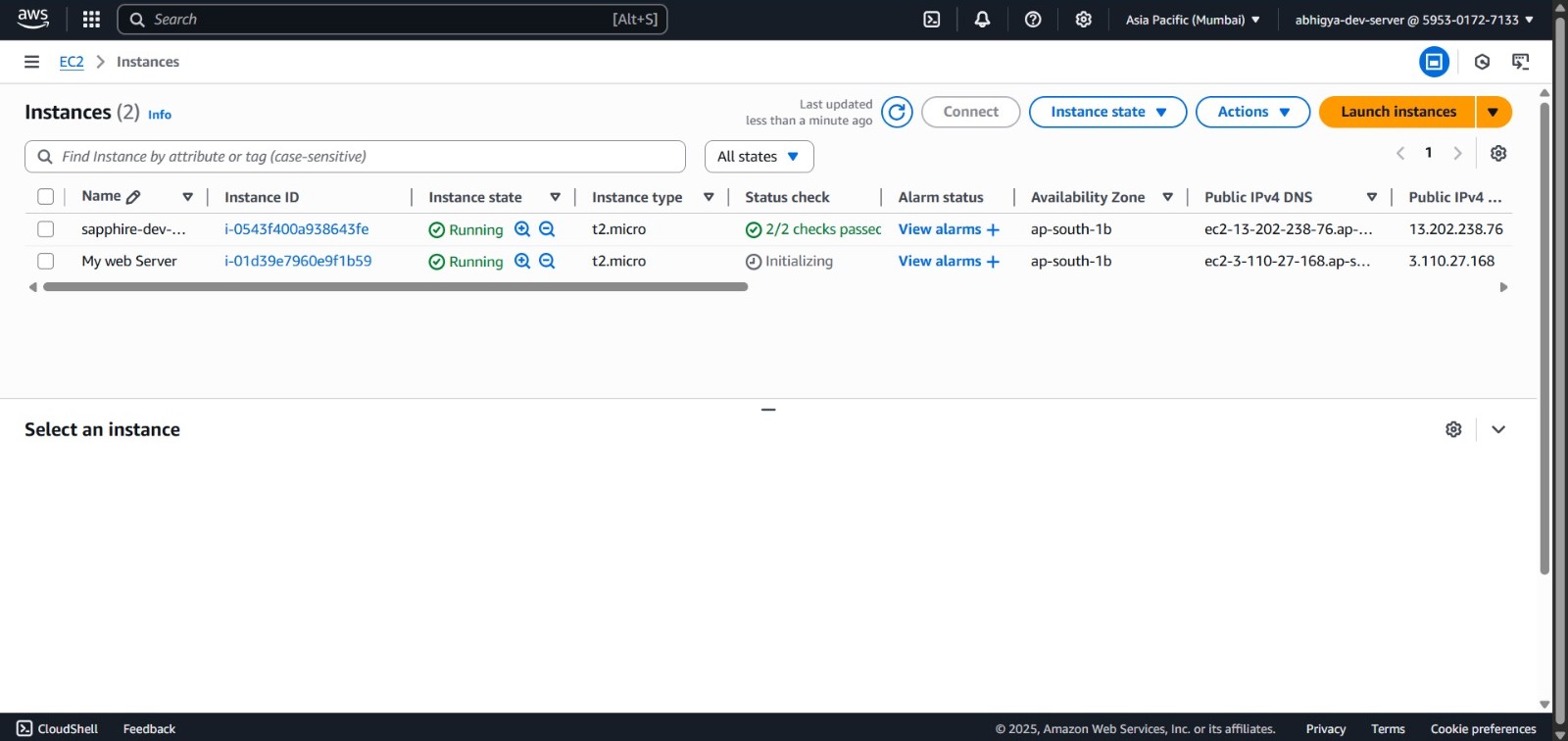

Launch EC2 Instances:

- Navigate to EC2 dashboard > Launch Instances

- Select Ubuntu Server 22.04 LTS, choose

t2.micro(free tier eligible) - Configure network settings with security groups allowing HTTP/SSH

- Launch at least two instances across different AZs with a key pair

-

Connect and Configure Instances:

ssh -i "your-key.pem" ubuntu@instance-public-ip sudo apt update sudo apt install -y nginx sudo systemctl start nginx sudo systemctl enable nginx -

Deploy Application:

sudo tee /var/www/html/index.html > /dev/null << EOF <!DOCTYPE html> <html> <head> <title>AWS Web Application</title> </head> <body> <div class="container"> <h1>Hello from <span class="aws-color">AWS</span>!</h1> <h3>Instance ID: $(curl -s http://169.254.169.254/latest/meta-data/instance-id)</h3> <p>This page is being served from an Amazon EC2 instance.</p> </div> </body> </html> EOF

-

Verify Deployment:

- Access each instance’s public IP/DNS in a browser

- Confirm the application is running correctly

Task 2: Set Up an Application Load Balancer (ALB)

Steps:

-

Create Target Group:

- In the AWS Management Console, navigate to the EC2 dashboard

- From the left navigation pane, select “Target Groups” under the “Load Balancing” section

- Click the “Create target group” button

- For target type, select “Instances” (since we’re routing to EC2 instances)

- Enter

web-target-groupin the “Target group name” field - Ensure Protocol is set to HTTP and Port is set to 80

- Select your VPC from the dropdown menu

- In the Health check settings section:

- Confirm the protocol is HTTP

- For Health check path, enter

/(this is the root path of your web server) - You can keep default values for other health check settings, or adjust as needed:

- Healthy threshold: 5 (consecutive successful checks required)

- Unhealthy threshold: 2 (consecutive failed checks to mark unhealthy)

- Timeout: 5 seconds (time to wait for response)

- Interval: 30 seconds (time between health checks)

- Click “Next” to proceed to target registration

- Select the EC2 instances you created in Task 1

- Click “Include as pending below” to add them to the target group

- Verify the instances appear in the list with port 80

- Click “Create target group” to finalize

-

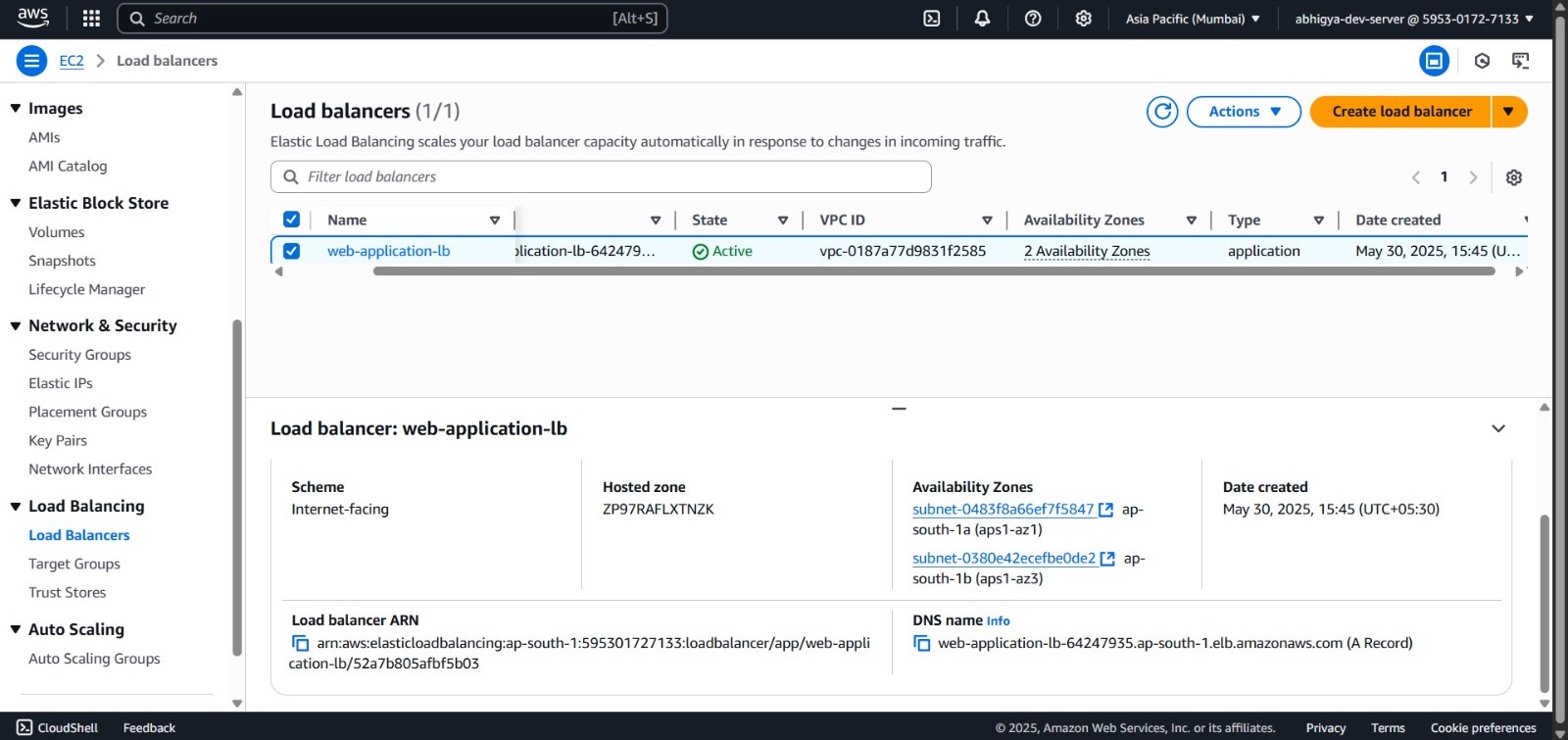

Create Load Balancer:

- Navigate to the EC2 dashboard in your AWS console

- In the left navigation menu, click on “Load Balancers” then click “Create load balancer”

- Select “Application Load Balancer” from the options (this type handles HTTP/HTTPS traffic)

- For the basic configuration:

- Name: Enter

web-application-lbas your load balancer name - Scheme: Select “Internet-facing” (this makes your ALB accessible from the internet)

- IP address type: Leave as “IPv4”

- Name: Enter

- For Network settings:

- Select your VPC from the dropdown

- Expand “Mappings” and select at least two different Availability Zones with public subnets

- This redundancy ensures high availability even if one AZ fails

- For Security groups:

- Create or select a security group that allows inbound HTTP (port 80) traffic

- Make sure the security group allows traffic from your expected sources (0.0.0.0/0 for public access)

- For Listeners and routing:

- Confirm the default HTTP:80 listener is added

- In the “Default action” section, select “Forward to target group” and choose your

web-target-group

- Review settings and click “Create load balancer”

-

Verify Load Balancer:

- After creation, wait for the ALB to transition from “Provisioning” to “Active” state (~2-5 minutes)

- Once active, locate and copy the ALB’s DNS name from the “Description” tab (it will look like

web-application-lb-1234567890.ap-south-1.elb.amazonaws.com) - Open a new browser tab and paste this DNS name into the address bar

- You should see your web application page load successfully

- Try reloading the page multiple times (press F5 or Ctrl+R) and observe the “Instance ID” value changing

- This confirms that your load balancer is correctly distributing traffic across your EC2 instances

- If the page doesn’t load, check that your:

- Target group has healthy instances (Target groups > your target group > Targets tab)

- Security groups are correctly configured (allowing HTTP on port 80)

- EC2 instances are running properly (can be accessed directly via their IP)

Task 3: Configure Auto Scaling Group (ASG)

Steps:

-

Create Launch Template:

- Return to the EC2 dashboard in AWS Management Console

- In the left navigation pane under “Instances”, click on “Launch Templates”

- Click the “Create launch template” button

- For the basic settings:

- Launch template name: Enter

web-launch-template - Description: Add “Template for web server auto scaling group” (descriptions help track resources)

- Check “Provide guidance to help me set up a template that I can use with EC2 Auto Scaling”

- Launch template name: Enter

- For Application and OS Images:

- Click “Browse more AMIs” and select the same Ubuntu Server 22.04 LTS AMI used previously

- Note the AMI ID for consistency (should match your existing instances)

- For Instance type:

- Select the same instance type as your existing instances (e.g.,

t2.micro)

- Select the same instance type as your existing instances (e.g.,

- For Key pair:

- Select the same key pair you used for your initial instances

- For Network settings:

- Select “Don’t include in launch template” for network interface (ASG will configure this later)

- For Security groups:

- Select the security group that allows HTTP (80) and SSH (22) access

- Ensure it’s the same one used for your existing web instances for consistency

- Under Advanced details:

- Scroll down to the “User data” section

- Select “Text” and paste the following script (this will run on instance launch)

#!/bin/bash sudo apt update sudo apt install -y nginx sudo systemctl start nginx sudo systemctl enable nginx # Create custom index page sudo tee /var/www/html/index.html > /dev/null << EOF <!DOCTYPE html> <html> <head> <title>AWS Web Application</title> </head> <body> <div class="container"> <h1>Hello from <span class="aws-color">AWS</span>!</h1> <h3>Instance ID: \$(curl -s http://169.254.169.254/latest/meta-data/instance-id)</h3> <p>This page is being served from an Amazon EC2 instance.</p> </div> </body> </html> EOF -

Create Auto Scaling Group:

- In the EC2 dashboard, navigate to “Auto Scaling Groups” under “Auto Scaling” in the left menu

- Click “Create Auto Scaling group” button

- For Step 1 (Choose launch template or configuration):

- Enter

web-asgas the Auto Scaling group name - Select the

web-launch-templateyou just created - Ensure the default version is selected (or choose a specific version)

- Click “Next”

- Enter

- For Step 2 (Choose instance launch options):

- Select your VPC from the dropdown menu

- Under “Availability Zones and subnets”, select multiple subnets across different AZs

- Select at least two different Availability Zones for high availability

- Click “Next”

- For Step 3 (Configure advanced options):

- Under “Load balancing”, select “Attach to an existing load balancer”

- Choose “Choose from your load balancer target groups”

- Select the

web-target-groupyou created earlier - Under “Health checks”, leave the “EC2” health check enabled

- Enable “ELB” health checks by checking the box

- Leave “Health check grace period” as 300 seconds (this gives instances time to boot)

- Click “Next”

- For Step 4 (Configure group size and scaling policies):

- Set the following group sizes:

- Desired capacity: 2 instances

- Minimum capacity: 2 instances (ensures at least 2 are always running)

- Maximum capacity: 6 instances (allows scaling up to 6 under load)

- Under “Scaling policies”, select “Target tracking scaling policy”

- Enter a policy name like “CPU-Based-Scaling”

- Select “Average CPU utilization” as the metric type

- Set target value to 70% (will add instances when average CPU exceeds 70%)

- Set instance warmup to 300 seconds (time for new instances to be included in metrics)

- Click “Next”

- Set the following group sizes:

- For Step 5 (Add notifications - Optional):

- You can configure SNS notifications or skip this step

- Click “Next”

- For Step 6 (Add tags - Optional):

- Add a “Name” tag with value “ASG-Managed-Instance” to help identify these instances

- Click “Next”

- For Step 7 (Review):

- Review all settings

- Click “Create Auto Scaling group”

-

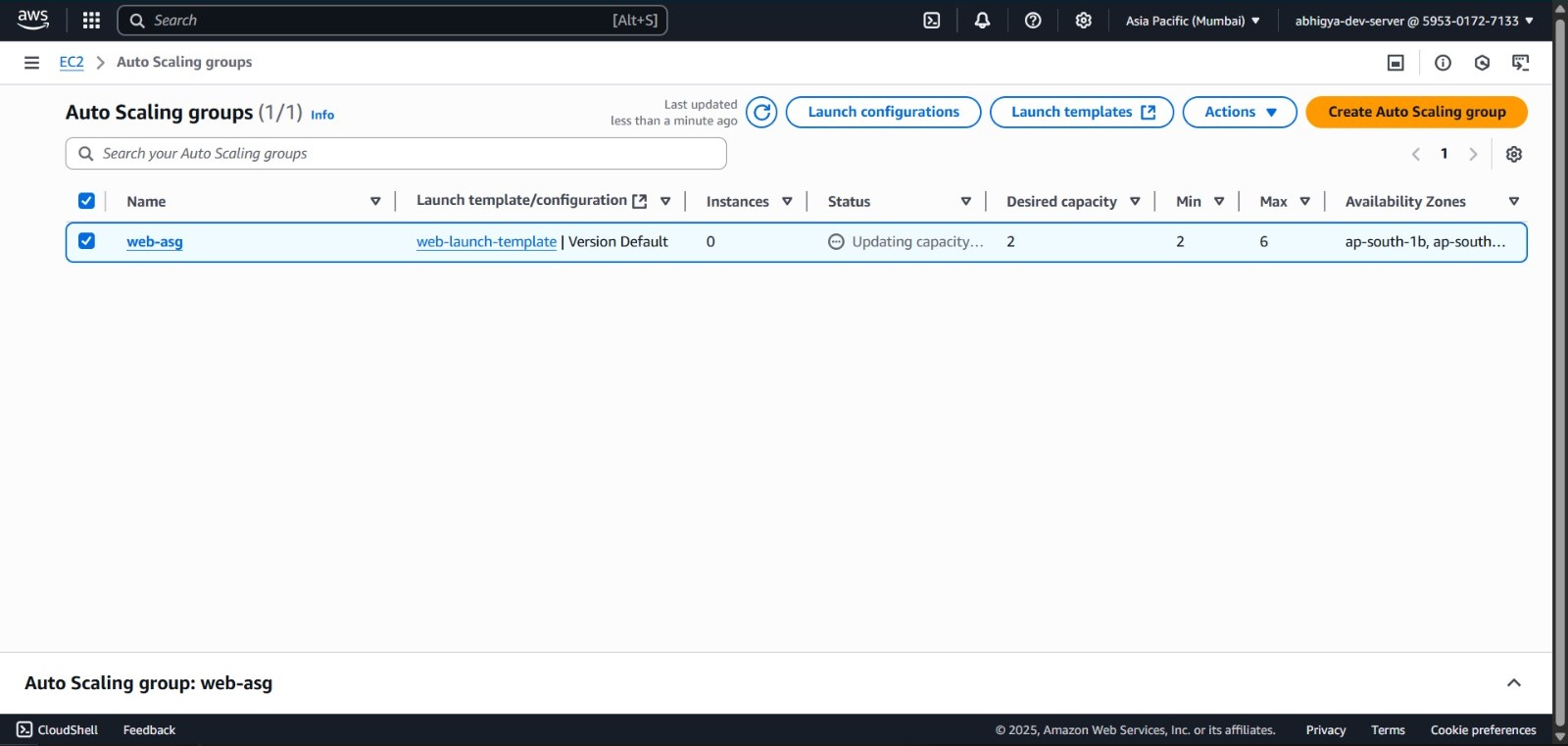

Verify ASG Configuration:

- After creating the ASG, navigate to the “Auto Scaling Groups” page

- Click on your newly created

web-asg - In the bottom pane, click the “Activity” tab to monitor launch progress

- You should see instances being launched (it may take 1-3 minutes)

- Check the “Instance management” tab to verify:

- Your desired capacity (2) matches the number of running instances

- The “Lifecycle” column shows “InService” for launched instances

- The “Health status” column shows “Healthy” for all instances

- Navigate to the “Target Groups” section and select your

web-target-group - Under the “Targets” tab, verify:

- The newly launched instances appear in the list

- Their health status shows as “healthy” (might take a few minutes)

- The “Status” column displays “registered” for all instances

- Test the setup by accessing your ALB DNS name in a browser again

- Refresh multiple times to ensure traffic is being directed to the ASG-managed instances

Task 4: Test Scaling Behavior

Steps:

-

Generate Load:

- Launch a separate EC2 instance to serve as your testing machine (or use an existing one)

- Connect to this instance via SSH:

# Replace with your key path and instance's public IP ssh -i "your-key.pem" ubuntu@test-instance-public-ip- Update package repositories and install testing tools:

# Update package lists and install Apache Bench (ab) and stress tool sudo apt update sudo apt install -y apache2-utils stress- Generate HTTP load using Apache Bench:

# This sends 10,000 requests with 100 concurrent connections # Replace with your actual ALB DNS name ab -n 10000 -c 100 http://your-alb-dns-name/- For more aggressive CPU stress testing (run in a separate terminal session):

# Stress all CPU cores at 100% for 5 minutes stress --cpu $(nproc) --timeout 300s -

Monitor Scaling Activities:

- Return to the AWS Management Console while your load test is running

- Navigate to EC2 > Auto Scaling Groups > select your

web-asg - Click on the “Activity” tab to observe launch activities in real-time:

- You should see “Starting a new instance” entries as the load increases

- Each entry will include a status message (e.g., “Successful”) and timestamp

- The “Cause” column explains why the instance was launched (e.g., “CPU utilization above target”)

- Click on the “Monitoring” tab to view real-time metrics:

- Locate the “CPU Utilization” graph and observe how it rises as load increases

- Track the “Group desired capacity” graph to see how ASG adjusts instance count

- For more detailed metrics, click the “CloudWatch” button in the “Monitoring” tab:

- This opens CloudWatch in a new tab with pre-filtered metrics for your ASG

- You can add graphs for CPU utilization, network traffic, and other metrics

- Try setting the time range to “1H” (last hour) for a clearer view of scaling events

- Return to EC2 > Instances to observe:

- New instances appearing with the “ASG-Managed-Instance” tag

- Instance states changing from “pending” to “running”

- The total count of running instances increasing based on load

-

Verify Application Availability:

- While the load testing and scaling activities are in progress, open a separate browser window

- Enter your ALB’s DNS name in the address bar (e.g.,

web-application-lb-1234567890.ap-south-1.elb.amazonaws.com) - Refresh the page multiple times (F5 or Ctrl+R) at different intervals

- Observe the following:

- The page should continue to load successfully without errors

- Response times should remain relatively stable

- The Instance ID shown on the page should change occasionally as requests are balanced across instances

- You may notice new instance IDs appearing as ASG launches additional instances

- Try this from different devices or networks if possible to simulate real user traffic

- Use browser developer tools (F12) to monitor network response times

- If using Chrome, you can open the Network tab and check the “Disable cache” option to ensure fresh requests

- Continue monitoring for at least 5-10 minutes to verify sustained availability

-

Observe Scale-In:

- After your load test has been running for a while (10-15 minutes), press Ctrl+C to terminate the load-generating tools

- In your SSH terminal, confirm all stress tests are stopped:

# Check if any stress processes are still running ps aux | grep stress # If needed, kill any remaining stress processes killall stress- Return to the AWS Management Console and navigate back to EC2 > Auto Scaling Groups > your

web-asg - Switch to the “Activity” tab and continue monitoring:

- Wait approximately 5-15 minutes (depending on your cooldown period)

- You should start seeing “Terminating EC2 instance” entries appear

- Each entry will include details about which instance is being terminated and why

- On the “Monitoring” tab, observe:

- The “CPU Utilization” graph should show a gradual decline

- The “Group desired capacity” graph should decrease in steps

- Eventually, your ASG should return to the minimum capacity (2 instances)

- On the “Instance management” tab, watch as:

- Instances status changes from “InService” to “Terminating” to being removed

- The termination happens gradually, not all at once (to prevent service disruption)

- Continue refreshing your website in the browser to confirm it remains available during scale-in

- This demonstrates the full auto-scaling lifecycle: scaling out under load and scaling in during quiet periods

What have we accomplished? We’ve built a rock-solid architecture that keeps your application humming along even when traffic suddenly spikes – like when that marketing campaign finally goes viral! And the best part? It optimizes costs by automatically scaling down during quieter periods. No more paying for idle servers at 3 AM!

Pro Tip: Always create your EC2 instances across multiple Availability Zones. Think of it as not keeping all your eggs in one basket. If one AZ has a hiccup (and yes, it happens!), your application keeps running smoothly from instances in other AZs. Your users won’t even notice the difference. Learn more about AWS high availability best practices.

Path-based Routing in AWS Application Load Balancer

What You’ll Learn

- Creating and configuring target groups for different applications

- Implementing path-based routing rules in an ALB

- Testing and verifying routing configuration

- Managing multiple services behind a single load balancer

Ever wondered how major websites serve different parts of their application from one domain? That’s where path-based

routing comes in! It’s

like having a smart traffic cop that directs visitors to different backend services based on the URL path they’re

requesting. Want to visit /api? You’ll be sent to the API servers. Looking for /blog? You’ll get routed to the blog

servers. This approach is incredibly handy when you’re running microservices

or need to manage multiple application components while presenting them to users under a single, clean URL.

graph TD

User([User]) -->|Request| DNS[DNS: app.example.com]

DNS -->|Routes to| ALB[Application Load Balancer]

ALB -->|Path: /api/*| APIRule{API Route Rule}

ALB -->|Path: /blog/*| BlogRule{Blog Route Rule}

ALB -->|Path: /admin/*| AdminRule{Admin Route Rule}

ALB -->|Path: /* default| DefaultRule{Default Rule}

APIRule -->|Target Group 1| APIServers[API Servers]

BlogRule -->|Target Group 2| BlogServers[Blog Servers]

AdminRule -->|Target Group 3| AdminServers[Admin Dashboard]

DefaultRule -->|Target Group 4| WebServers[Web Application]

classDef user fill:#ECEFF1,stroke:#607D8B,color:black

classDef alb fill:#FF9900,stroke:#E65100,color:white

classDef rule fill:#42A5F5,stroke:#0D47A1,color:white

classDef servers fill:#66BB6A,stroke:#2E7D32,color:white

class User user

class ALB,DNS alb

class APIRule,BlogRule,AdminRule,DefaultRule rule

class APIServers,BlogServers,AdminServers,WebServers servers

Key Concepts

| Concept | Description |

|---|---|

| Listener | Process that checks for connection requests using a specified protocol and port |

| Target Group | Group of resources (EC2 instances, containers, IP addresses) that receive traffic |

| Routing Rules | Conditions that determine how requests are forwarded to target groups |

| Path Pattern | URL pattern used to match and route requests to specific target groups |

| Rule Priority | Determines the order in which rules are evaluated (lowest number first) |

Task 1: Configure Load Balancer and Target Groups

Steps:

-

Create or Use Existing ALB:

- Log into the AWS Management Console at https://console.aws.amazon.com

- Navigate to the EC2 dashboard by clicking “Services” at the top, then selecting “EC2” under “Compute”

- In the left navigation menu, click on “Load Balancers” under the “Load Balancing” section

- Click the blue “Create load balancer” button at the top

- On the “Select load balancer type” page, choose “Application Load Balancer” and click “Create”

- For the basic configuration:

- Name: Enter

multi-app-albas your load balancer name - Scheme: Select “Internet-facing” to make it accessible from the internet

- IP address type: Choose “IPv4” (the default option)

- Name: Enter

- For Listeners:

- Confirm the default HTTP:80 listener is present (you can add HTTPS:443 if needed)

- For Availability Zones:

- Select your VPC from the dropdown

- Choose at least two availability zones and select their public subnets

- Selecting multiple AZs creates redundancy for high availability

- Click “Next: Configure Security Settings” (or skip if not using HTTPS)

- For Security groups:

- Either create a new security group or select an existing one

- Ensure it allows inbound HTTP (port 80) traffic from your desired sources (0.0.0.0/0 for public)

- Click “Next: Configure Routing” (but we’ll create target groups separately first)

- For now, just click through the remaining steps using any default target group

- You can complete the ALB creation process and update listeners afterward

-

Create First Target Group:

- Return to the EC2 dashboard in AWS Management Console

- In the left navigation pane, click on “Target Groups” under the “Load Balancing” section

- Click the “Create target group” button

- For the basic settings:

- Choose a target type: Select “Instances” (EC2 instances are the most common target)

- Target group name: Enter

app1-target-group - Protocol: Select “HTTP”

- Port: Enter “80”

- VPC: Select your VPC from the dropdown menu

- For the Health check settings:

- Protocol: Leave as HTTP

- Path: Enter

/app1/health(this is the endpoint that will be checked to determine if instances are healthy) - Advanced health check settings (optional):

- Port: Leave as “traffic port” (uses the same port as your target, 80)

- Healthy threshold: Set to 2 (number of consecutive successful checks to consider target healthy)

- Unhealthy threshold: Set to 2 (number of failed checks to consider target unhealthy)

- Timeout: Set to 5 seconds (time to wait for a response)

- Interval: Set to 30 seconds (time between health checks)

- Success codes: Leave as “200” (HTTP status code indicating success)

- Click “Next” to proceed to “Register targets”

- Under “Available instances”, select the EC2 instances that will serve App1

- Click “Include as pending below” to add them to the target group

- Review the selected instances in the “Review targets” section

- Click “Create target group” to finalize

-

Create Second Target Group:

- Repeat the process to create another target group

- For the basic settings:

- Choose a target type: Select “Instances”

- Target group name: Enter

app2-target-group - Protocol: Select “HTTP”

- Port: Enter “80”

- VPC: Select the same VPC you used for the first target group

- For the Health check settings:

- Protocol: Leave as HTTP

- Path: Enter

/app2/health(a different health check path for this application) - Keep the same advanced settings as the first target group, or adjust as needed

- Click “Next” to proceed to “Register targets”

- Select the EC2 instances that will serve App2 (these may be different from App1 instances)

- Complete the target group creation process

- Note: If using the same instances for both apps, make sure each instance has both /app1/health and /app2/health endpoints

Task 2: Configure Routing Rules

Steps:

-

Access Listener Rules:

- Return to the EC2 dashboard in the AWS Management Console

- Click on “Load Balancers” in the left navigation menu

- Select your newly created

multi-app-albfrom the list - In the bottom section, click on the “Listeners” tab to view all listeners

- Find the HTTP:80 listener in the list and click the “View/edit rules” link in the “Rules” column

- This will open the rules editor interface where you can manage path-based routing

-

Create Path-based Rules:

- In the rules editor, you’ll see a visual interface for managing rules

- By default, there’s a single default rule that forwards all traffic to the default target group

- To add your first rule, click the “+” icon (Insert Rule) in the appropriate position

-

For the first rule:

- Click “Add condition” and select “Path” from the dropdown

- For the path pattern, enter

/app1*or for more specificity/app1/* - Wildcards (*) match any characters, so this will route any URL starting with /app1

- Click “Add action” and select “Forward to target groups”

- Select your

app1-target-groupfrom the dropdown - Click the checkmark icon to save this rule

- To add the second rule, click the “+” icon again in the appropriate position

-

For the second rule:

- Click “Add condition” and select “Path” from the dropdown

- For the path pattern, enter

/app2*or for more specificity/app2/* - Click “Add action” and select “Forward to target groups”

- Select your

app2-target-groupfrom the dropdown - Click the checkmark icon to save this rule

-

For the default rule (which will match any requests that don’t match the above rules):

- You can either:

- Leave it pointing to your original target group

- Modify it to forward to a “default” target group if you’ve created one

- Change it to return a fixed response (e.g., a 404 Not Found status code)

- You can either:

-

Understanding Rule Priority Logic:

- Rules are evaluated in order of their priority number (lowest number = highest priority)

- When you added rules using the visual editor, they were automatically assigned priorities

- You can explicitly set rule priorities by clicking the edit icon next to the rule number

- Best practice is to leave gaps between priority numbers (e.g., 10, 20, 30) to make it easier to insert rules later

- Only the first matching rule is applied - subsequent matching rules are ignored

- To reorder rules, click “Reorder rules” and drag them to the desired positions

- Click “Update” to save your priority changes

- Adding Advanced Conditions (Optional):

- Path-based routing can be combined with other conditions for more complex routing rules:

- Host header: Route based on domain name (e.g., api.example.com vs. www.example.com)

- HTTP headers: Route based on specific header values

- Query strings: Route based on URL parameters

- Source IP: Route based on client IP address

- To create a combined rule:

- Click “+” to add a new rule

- Add multiple conditions (e.g., both Path and Host header)

- A request must match ALL conditions for the rule to apply

- Example: Route requests to /api/* but only from api.example.com domain

- Click the checkmark icon to save your advanced rule

- Path-based routing can be combined with other conditions for more complex routing rules:

-

Prepare and Test Applications:

-

Configure Your Backend Applications:

- SSH into each EC2 instance that will host both applications:

ssh -i "your-key.pem" ubuntu@instance-public-ip- Create directory structure for each application:

# Create directories for both applications sudo mkdir -p /var/www/html/app1 sudo mkdir -p /var/www/html/app2 # Set proper ownership sudo chown -R www-data:www-data /var/www/html/app1 sudo chown -R www-data:www-data /var/www/html/app2- Create the health check endpoint for App1:

sudo tee /var/www/html/app1/health > /dev/null << EOF <!DOCTYPE html> <html> <head> <title>App1 Health Check</title> </head> <body> <h1>App1 is healthy</h1> </body> </html> EOF- Create a sample App1 homepage:

sudo tee /var/www/html/app1/index.html > /dev/null << EOF <!DOCTYPE html> <html> <head> <title>Application 1</title> </head> <body> <div class="container"> <h1>Welcome to Application 1</h1> <p>This content is served by target group app1-target-group</p> <p>Server: $(hostname)</p> <p>Path: /app1/</p> </div> </body> </html> EOF- Create the health check endpoint for App2:

sudo tee /var/www/html/app2/health > /dev/null << EOF <!DOCTYPE html> <html> <head> <title>App2 Health Check</title> </head> <body> <h1>App2 is healthy</h1> </body> </html> EOF- Create a sample App2 homepage:

sudo tee /var/www/html/app2/index.html > /dev/null << EOF <!DOCTYPE html> <html> <head> <title>Application 2</title> </head> <body> <div class="container"> <h1>Welcome to Application 2</h1> <p>This content is served by target group app2-target-group</p> <p>Server: $(hostname)</p> <p>Path: /app2/</p> </div> </body> </html> EOF- Configure Nginx to handle path-based routing properly:

sudo tee /etc/nginx/sites-available/default > /dev/null << EOF server { listen 80 default_server; listen [::]:80 default_server; root /var/www/html; index index.html index.htm; # For root path location / { try_files \$uri \$uri/ =404; } # For App1 path location /app1/ { alias /var/www/html/app1/; try_files \$uri \$uri/ /app1/index.html; } # For App1 health check location = /app1/health { alias /var/www/html/app1/health; } # For App2 path location /app2/ { alias /var/www/html/app2/; try_files \$uri \$uri/ /app2/index.html; } # For App2 health check location = /app2/health { alias /var/www/html/app2/health; } } EOF- Test and reload Nginx configuration:

# Verify nginx configuration syntax sudo nginx -t # If the test passes, reload nginx to apply changes sudo systemctl reload nginx- Repeat these steps for all EC2 instances in your target groups

-

Test Direct Access to Applications:

- Before testing the load balancer routing, verify each application works directly:

- Try accessing the health check endpoint directly on each EC2 instance:

http://instance-public-ip/app1/healthhttp://instance-public-ip/app2/health

- Try accessing the application pages directly:

http://instance-public-ip/app1/http://instance-public-ip/app2/

- Verify that the correct content is served for each path on each instance

- If any issues occur, check the nginx error logs:

sudo tail -f /var/log/nginx/error.log -

Test Load Balancer Routing:

- Get your ALB’s DNS name from the EC2 console:

- EC2 > Load Balancers > select

multi-app-alb> Description tab - Look for the “DNS name” field (e.g.,

multi-app-alb-1234567890.us-east-1.elb.amazonaws.com)

- EC2 > Load Balancers > select

- Test accessing App1 through the load balancer:

- Open a web browser and navigate to

http://your-alb-dns-name/app1/ - You should see the App1 homepage content

- Refresh several times and verify the server hostname changes if you have multiple instances

- Open a web browser and navigate to

- Test accessing App2 through the load balancer:

- Navigate to

http://your-alb-dns-name/app2/ - You should see the App2 homepage content

- Again, refresh several times to verify load balancing is working

- Navigate to

- Test the default rule:

- Navigate to

http://your-alb-dns-name/or any other non-matching path - Verify it behaves as expected based on your default rule configuration

- Navigate to

- Get your ALB’s DNS name from the EC2 console:

-

Monitor Target Health:

- In the EC2 console, go to Target Groups

- Select

app1-target-groupand click the “Targets” tab - Verify all instances show “Healthy” status

- Repeat for

app2-target-group - If any targets show “Unhealthy” status:

- Check that the health check path exists (/app1/health or /app2/health)

- Verify nginx is properly configured and running

- Check security groups to ensure the ALB can reach your instances on port 80

- Review the target group settings to ensure the health check is configured correctly

- Test Fault Tolerance:

- To verify high availability:

- Stop one of the EC2 instances in your target group

- Observe in the target group that the instance transitions to “Unhealthy”

- Test accessing the application - requests should be routed only to healthy instances

- Restart the stopped instance

- Observe it returning to “Healthy” status and rejoining the rotation

- To verify high availability:

- To edit the default rule, click the edit (pencil) icon and make your desired changes

-

-

Set Rule Priority:

- In the rules editor, rules are evaluated in numeric order (lowest number first)

- You can reorder rules by dragging them up or down in the interface

- More specific rules (like exact path matches) should have higher priority than general rules

- To manually adjust priorities, click on the rule’s current priority number to edit it

- After arranging all rules, click “Update” at the bottom to save your changes

- Rules priority is crucial - if a request could match multiple rules, the one with highest priority (lowest number) will be applied

Task 3: Prepare and Test Applications

Steps:

-

Configure Application Servers:

- First, connect to your EC2 instance that will host App1:

# Connect to your EC2 instance via SSH # Replace with your key file and actual public IP address ssh -i "your-key.pem" ubuntu@ec2-instance-public-ip- Configure the first application (App1):

# Create the directory structure for App1 sudo mkdir -p /var/www/html/app1 sudo mkdir -p /var/www/html/app1/health # Create the main index.html file with a distinctive App1 theme sudo tee /var/www/html/app1/index.html > /dev/null << EOF <!DOCTYPE html> <html> <head> <title>App 1</title> </head> <body> <div class="container"> <h1>Application 1</h1> <div class="app-label">APP1</div> <p>This request was routed to App 1 based on the path.</p> <p>Server time: \$(date)</p> <p>Instance ID: \$(curl -s http://169.254.169.254/latest/meta-data/instance-id || echo "Unknown")</p> </div> </body> </html> EOF # Create a simple health check file that returns OK # The ALB will use this to confirm the application is running sudo tee /var/www/html/app1/health/index.html > /dev/null << EOF OK - App1 is healthy EOF # Set proper permissions sudo chown -R www-data:www-data /var/www/html/app1- Now configure the second application (App2) on the same or different instance:

# Create the directory structure for App2 sudo mkdir -p /var/www/html/app2 sudo mkdir -p /var/www/html/app2/health # Create the main index.html file with a distinctive App2 theme sudo tee /var/www/html/app2/index.html > /dev/null << EOF <!DOCTYPE html> <html> <head> <title>App 2</title> </head> <body> <div class="container"> <h1>Application 2</h1> <div class="app-label">APP2</div> <p>This request was routed to App 2 based on the path.</p> <p>Server time: \$(date)</p> <p>Instance ID: \$(curl -s http://169.254.169.254/latest/meta-data/instance-id || echo "Unknown")</p> </div> </body> </html> EOF # Create a simple health check file for App2 sudo tee /var/www/html/app2/health/index.html > /dev/null << EOF OK - App2 is healthy EOF # Set proper permissions sudo chown -R www-data:www-data /var/www/html/app2 # Restart the web server to apply changes sudo systemctl restart nginx || sudo systemctl restart apache2 -

Test Routing Configuration:

- After configuring both applications and ensuring the ALB is properly set up:

- Open your web browser and locate your ALB’s DNS name, which you can find:

- In the EC2 console under Load Balancers

- In the Description tab of your ALB’s details page

- It will look like

multi-app-alb-1234567890.ap-south-1.elb.amazonaws.com

- Test App1 routing:

- Enter the URL:

http://your-alb-dns-name/app1/(replacing “your-alb-dns-name” with your actual ALB DNS) - You should see the blue-themed App1 page with “Application 1” heading

- If you see a 404 error or wrong content, check:

- App1 directory exists at /var/www/html/app1/ on your server

- Your ALB rule for /app1/* is correctly configured

- The target group health check is passing

- Enter the URL:

- Test App2 routing:

- Enter the URL:

http://your-alb-dns-name/app2/ - You should see the red-themed App2 page with “Application 2” heading

- Different styling confirms you’re seeing different applications

- Enter the URL:

- Test the default rule:

- Try accessing the root URL:

http://your-alb-dns-name/ - The behavior depends on your default rule configuration:

- It might show a default app, a 404 error, or redirect

- Try accessing the root URL:

- Test an invalid path:

- Try

http://your-alb-dns-name/app3/ - This should follow your default rule’s behavior

- Try

-

Validate with Command Line:

- If you’re troubleshooting or need to automate testing, use the curl command:

# Check HTTP headers for App1 curl -I http://your-alb-dns-name/app1/ # Should show HTTP/1.1 200 OK if working properly # Check HTTP headers for App2 curl -I http://your-alb-dns-name/app2/ # View full content of App1 curl http://your-alb-dns-name/app1/ # View full content of App2 curl http://your-alb-dns-name/app2/ # Test edge cases curl -I http://your-alb-dns-name/ curl -I http://your-alb-dns-name/app1/subpath/ # Check health check endpoints (should return OK) curl http://your-alb-dns-name/app1/health/ curl http://your-alb-dns-name/app2/health/- To run a simple load test to verify ALB functionality:

# Install Apache Bench if not already installed sudo apt update && sudo apt install -y apache2-utils # Send 100 requests with 10 concurrent connections ab -n 100 -c 10 http://your-alb-dns-name/app1/ ab -n 100 -c 10 http://your-alb-dns-name/app2/

This path-based routing configuration allows you to host multiple applications or services under a single domain name, simplifying your architecture and reducing costs by sharing a single load balancer.

Pro Tip: If you’re setting this up for production (and I hope you are!), don’t skip on security. Take a few extra minutes to configure HTTPS listeners with SSL/TLS certificates from AWS Certificate Manager (they’re free!). While you’re at it, set up an HTTP to HTTPS redirect so your users are always on the secure version of your site. In today’s world, encryption isn’t optional – it’s essential.

Advanced AWS Networking and Services

What You’ll Learn

- Creating secure network architectures with public and private subnets

- Setting up secure access to private instances using a bastion host

- Implementing NAT Gateway for outbound internet access

- Configuring security groups for proper access control

Now we’re going to dive into the really good stuff – the advanced networking concepts that truly form the backbone of secure and scalable AWS infrastructures. If you’ve been following along, you’re about to level up your AWS skills with proper network segmentation and secure access patterns. These are the techniques that separate AWS hobbyists from true cloud architects!

Key Concepts

| Concept | Description |

|---|---|

| VPC (Virtual Private Cloud) | Your own isolated section of the AWS cloud |

| Subnets | Segments of your VPC’s IP address range with different accessibility levels |

| NAT Gateway | Allows instances in private subnets to access the internet while preventing inbound access |

| Internet Gateway | Enables communication between your VPC and the internet |

| Route Tables | Control the traffic flow within your VPC and to external networks |

| Security Groups | Virtual firewalls that control inbound and outbound traffic at the instance level |

| Bastion Host | A secure instance that serves as a gateway for accessing private resources |

Task 1: Create VPC and Subnet Architecture

graph TD

subgraph "secure-vpc (10.0.0.0/16)"

subgraph "Public Subnet (10.0.1.0/24)"

BH[Bastion Host]

IGW[Internet Gateway]

NAT[NAT Gateway]

end

subgraph "Private Subnet (10.0.2.0/24)"

APP[Application Servers]

DB[Database Servers]

end

IGW <-->|Public Traffic| Internet((Internet))

BH -->|SSH Access| APP

APP -->|Outbound Traffic| NAT

NAT -->|Route| IGW

APP <-->|Internal Traffic| DB

end

Admin([Admin User]) -->|SSH| BH

classDef public fill:#81D4FA,stroke:#0277BD,color:black;

classDef private fill:#FFE0B2,stroke:#E65100,color:black;

classDef external fill:#ECEFF1,stroke:#607D8B,color:black;

class BH,IGW,NAT public;

class APP,DB private;

class Internet,Admin external;

Steps:

-

Create VPC:

- Sign in to the AWS Management Console at https://console.aws.amazon.com

- In the search bar at the top, type “VPC” and select the “VPC” service from the dropdown

- In the left navigation panel, click on “Your VPCs” under “Virtual private cloud”

- Click the orange “Create VPC” button in the top-right corner

- For VPC settings, select “VPC only” (not VPC and more)

- Enter a name tag:

secure-vpc(this helps identify your VPC in the AWS console) - Enter the IPv4 CIDR block:

10.0.0.0/16(this provides up to 65,536 IP addresses) - Leave the IPv6 CIDR block as “No IPv6 CIDR block” unless specifically needed

- Keep the Tenancy as “Default” (dedicated tenancy costs significantly more)

- Click “Create VPC” at the bottom of the page

- You’ll see a success message when complete - click “Close” to continue

- Verify your VPC appears in the VPC list with the correct CIDR block and name

-

Create Subnets:

- In the left navigation panel of the VPC Dashboard, click on “Subnets”

- Click the orange “Create subnet” button in the top-right corner

- For VPC ID, select the

secure-vpcyou just created from the dropdown - Under “Subnet settings”, you’ll create your first subnet:

- Subnet name: Enter

public-subnet-1 - Availability Zone: Select the first AZ in the list (e.g., ap-south-1a if in Mumbai region)

- IPv4 CIDR block: Enter

10.0.1.0/24(this provides 256 IP addresses)

- Subnet name: Enter

- Click “Add new subnet” to add another subnet to this creation process

- Enter details for your second subnet:

- Subnet name: Enter

private-subnet-1 - Availability Zone: Select the same AZ as the public subnet for simplicity (e.g., ap-south-1a)

- IPv4 CIDR block: Enter

10.0.2.0/24(this provides another 256 IP addresses)

- Subnet name: Enter

- Click “Create subnet” at the bottom of the page

- Verify both subnets appear in the subnet list with correct settings

- Important: By default, new subnets do not automatically assign public IP addresses to instances

- For the public subnet, enable auto-assign public IP:

- Select

public-subnet-1from the list - Click “Actions” button and select “Edit subnet settings”

- Check the box for “Enable auto-assign public IPv4 address”

- Click “Save”

- Select

-

Create Internet Gateway:

- In the left navigation panel of the VPC Dashboard, click on “Internet Gateways”

- Click the “Create internet gateway” button

- Enter a name tag:

secure-vpc-igw - Click “Create internet gateway”

- After creation, you’ll see a message that the Internet Gateway is detached

- Click the “Actions” button and select “Attach to VPC”

- Select your

secure-vpcfrom the dropdown - Click “Attach internet gateway”

- Verify the State changes to “Attached” in the Internet Gateway list

-

Create NAT Gateway:

- In the left navigation panel of the VPC Dashboard, click on “NAT Gateways”

- Click the “Create NAT gateway” button

- Configure the following settings:

- Name: Enter

secure-vpc-nat - Subnet: Select

public-subnet-1from the dropdown (NAT gateway must be in a public subnet) - Connectivity type: Select “Public” (allows instances in private subnets to access the internet)

- Elastic IP allocation ID: Click “Allocate Elastic IP” button (this creates a new static IP)

- You’ll see a new Elastic IP has been allocated

- Name: Enter

- Click “Create NAT gateway”

- Note: NAT Gateways incur charges by the hour plus data processing fees

- The status will initially show “Pending” - it takes about 5 minutes to become “Available”

- Wait until the NAT Gateway status changes to “Available” before proceeding

Task 2: Configure Routing

Steps:

-

Create and Configure Public Route Table:

- In the left navigation panel of the VPC Dashboard, click on “Route Tables”

- Click the “Create route table” button

- Configure the following settings:

- Name tag: Enter

public-route-table - VPC: Select

secure-vpcfrom the dropdown

- Name tag: Enter

- Click “Create route table”

- After creation, select the newly created

public-route-tablefrom the list - At the bottom of the page, click on the “Routes” tab

- Click “Edit routes” button

- You’ll see the local route is already created (10.0.0.0/16 pointing to local)

- Click “Add route” to add a new route

- Enter the following settings:

- Destination: Enter

0.0.0.0/0(this represents all internet traffic) - Target: Select “Internet Gateway” and choose

secure-vpc-igwfrom the dropdown

- Destination: Enter

- Click “Save changes”

- Now, click on the “Subnet associations” tab

- Click “Edit subnet associations” button

- Check the box next to

public-subnet-1to associate it with this route table - Click “Save associations”

- This association means that traffic from instances in the public subnet will use this route table

- Verify the subnet is now listed under “Explicit subnet associations”

-

Create and Configure Private Route Table:

- Click “Route Tables” in the left navigation panel again

- Click the “Create route table” button

- Configure the following settings:

- Name tag: Enter

private-route-table - VPC: Select

secure-vpcfrom the dropdown

- Name tag: Enter

- Click “Create route table”

- After creation, select the newly created

private-route-tablefrom the list - At the bottom of the page, click on the “Routes” tab

- Click “Edit routes” button

- Click “Add route” button

- Enter the following settings:

- Destination: Enter

0.0.0.0/0(this represents all internet traffic) - Target: Select “NAT Gateway” and choose the NAT Gateway you created earlier (

secure-vpc-nat)

- Destination: Enter

- Click “Save changes”

- Now, click on the “Subnet associations” tab

- Click “Edit subnet associations” button

- Check the box next to

private-subnet-1to associate it with this route table - Click “Save associations”

- This association allows instances in the private subnet to access the internet through the NAT Gateway while remaining private

-

Verify the subnet is now listed under “Explicit subnet associations”

- To confirm your route tables are set up correctly, check that:

- The

public-route-tablehas a local route (10.0.0.0/16) and an internet gateway route (0.0.0.0/0) - The

private-route-tablehas a local route (10.0.0.0/16) and a NAT gateway route (0.0.0.0/0) - Each route table is associated with the correct subnet

- The

Task 3: Launch and Configure EC2 Instances

Steps:

-

Launch Bastion Host:

- In your AWS Management Console, navigate to the EC2 service (search “EC2” in the search bar)

- In the EC2 Dashboard, click on “Instances” in the left navigation pane

- Click the orange “Launch instances” button to begin the instance creation process

- Instance Configuration - Name and tags:

- Enter

bastion-hostin the “Name” field - Optionally add additional tags like Environment=Dev, Role=Bastion for better organization

- Enter

- Application and OS Images (Amazon Machine Image):

- In the “Quick Start” tab, select “Ubuntu”

- From the Ubuntu AMI list, select “Ubuntu Server 22.04 LTS (HVM), SSD Volume Type”

- Verify the architecture shows as “64-bit (x86)”

- Instance type:

- Select “t2.micro” from the list (this is free tier eligible)

- You can use the search box to filter for “t2.micro” if needed

- Key pair (login):

- If you have an existing key pair you want to use, select it from the dropdown

- If you need a new key pair, click “Create new key pair”

- Enter a name like

vpc-key-pair - Keep Key pair type as “RSA”

- Keep Private key file format as “.pem” (or select “.ppk” if using PuTTY on Windows)

- Click “Create key pair”

- Important: The private key file (.pem) will automatically download - store it securely as you cannot download it again

- Enter a name like

- Network settings:

- Click “Edit” to expand the network settings section

- VPC: Select

secure-vpcfrom the dropdown - Subnet: Select

public-subnet-1from the dropdown (this is your public subnet) - Auto-assign public IP: Select “Enable” to ensure the instance gets a public IP

- Firewall (security groups): Select “Create security group”

- Security group name: Enter

bastion-sg - Description: Enter “Security group for bastion host”

- For the security rule:

- Keep Type as “SSH”

- Source type: Select “My IP” (this automatically adds your current IP address)

- Description: Enter “SSH access from my IP only”

- Configure storage:

- Keep the default storage settings (8 GB gp2 volume)

- Advanced details:

- No changes needed for basic setup

- Summary:

- Review all your settings

- Click “Launch instance” in the right panel

- You’ll see a success message with your instance ID

- Click “View all instances” to return to the instances page

- Wait for the “Instance state” to change to “Running” and “Status check” to show “2/2 checks passed”

- Make note of the public IP address displayed in the instance details

-

Launch Private Instance:

- From the EC2 Dashboard, click “Launch instances” again

- Instance Configuration - Name and tags:

- Enter

private-instancein the “Name” field

- Enter

- Application and OS Images:

- Select the same Ubuntu Server 22.04 LTS image as before

- Instance type:

- Select “t2.micro” again

- Key pair:

- Very important: Select the SAME key pair that you used for the bastion host

- This allows you to use the same private key when accessing through the bastion

- Network settings:

- Click “Edit” to expand the network settings section

- VPC: Select

secure-vpcfrom the dropdown - Subnet: Select

private-subnet-1from the dropdown (this is your private subnet) - Auto-assign public IP: Select “Disable” (private instances shouldn’t have public IPs)

- Firewall (security groups): Select “Create security group”

- Security group name: Enter

private-instance-sg - Description: Enter “Security group for private instance”

- For the security rule:

- Keep Type as “SSH”

- Source type: Select “Custom”

- For Source, we need to reference the bastion’s security group:

- Click in the Source field and wait for the dropdown to appear

- Select the

bastion-sgsecurity group from the list

- Description: Enter “SSH access from bastion host only”

- Configure storage:

- Keep the default storage settings (8 GB gp2 volume)

- Advanced details:

- No changes needed for basic setup

- Summary:

- Review all your settings

- Click “Launch instance” in the right panel

- Wait for the instance to reach “Running” state and pass its status checks

- Make note of the private IP address displayed in the instance details (will look like 10.0.2.X)

- Notice that this instance only has a private IP address and no public IP

Task 4: Test Secure Connectivity

Steps:

-

Transfer SSH Key to Bastion:

- Open your terminal (Command Prompt, PowerShell, Terminal, etc.) on your local computer

- Navigate to the directory where your key pair file (.pem) is stored

- Run the following command to copy your key pair to the bastion host:

# Replace with your actual key filename and bastion's public IP # For example: scp -i vpc-key-pair.pem vpc-key-pair.pem [email protected]:~/ scp -i your-key.pem your-key.pem ubuntu@bastion-public-ip:~/- If you get a warning about the host authenticity, type “yes” to continue

- You should see output indicating the transfer progress and completion

- Note: On Windows, you might need to adjust file permissions first if using PowerShell:

# For Windows PowerShell users only icacls.exe "your-key.pem" /inheritance:r /grant:r "$($env:USERNAME):(R)" -

Connect to Bastion Host:

- In your terminal, connect to the bastion host using SSH:

# Replace with your actual key filename and bastion's public IP # For example: ssh -i vpc-key-pair.pem [email protected] ssh -i your-key.pem ubuntu@bastion-public-ip- If successful, you’ll see a welcome message and a Ubuntu command prompt

- You are now logged into the bastion host in the public subnet

-

Set Proper Permissions on Key File in Bastion Host:

- While logged into the bastion host, run the following command to secure your key file:

# Change permissions to make the private key secure # Without this step, SSH will refuse to use the key file due to insecure permissions chmod 400 ~/your-key.pem- The command restricts permissions so only you (the owner) can read the file

- This is a security requirement for SSH private keys

-

Connect to Private Instance from Bastion:

- Now you’ll use the bastion host as a jump server to connect to your private instance

- While still logged into the bastion host, run:

# Replace with your private instance's private IP (should start with 10.0.2.X) # For example: ssh -i ~/vpc-key-pair.pem [email protected] ssh -i ~/your-key.pem ubuntu@private-instance-private-ip- If successful, you’ll see a new welcome message and command prompt

- You are now logged into the private instance that doesn’t have direct internet access

- To confirm you’re on the private instance, you can check its private IP:

hostname -I- The IP should match the private IP you noted earlier

-

Verify Internet Access through NAT Gateway:

- While logged into the private instance, test outbound internet connectivity:

# This sends 4 ping packets to Google's DNS server ping -c 4 8.8.8.8 # Try accessing Google by domain name ping -c 4 google.com-

You should see successful ping responses, indicating that:

- The private instance can access the internet (outbound)

- DNS resolution is working properly

- The NAT Gateway is correctly routing traffic

-

Also test downloading a file to verify HTTP traffic:

# Try downloading a file from the internet wget -q --spider http://example.com echo $? # Should return 0 if successful- A return value of 0 confirms successful HTTP connectivity

-

Verify Security Configuration:

- Try to access the private instance directly from your local machine (this should fail):

# Open a new terminal window on your local machine # Try to SSH directly to the private instance using its private IP ssh -i your-key.pem ubuntu@private-instance-private-ip-

This connection attempt should time out, confirming that:

- The private instance is not directly accessible from the internet

- You must go through the bastion host for access

- Your security architecture is working as designed

-

Return to your bastion connection and exit both SSH sessions:

# Exit from the private instance exit # Exit from the bastion host exit

This secure network architecture you’ve created demonstrates the “defense in depth” principle. The bastion host acts as a secure entry point, while private instances remain protected from direct internet access. Meanwhile, the NAT Gateway enables those private instances to still access external resources when needed.

This secure network architecture forms the foundation for hosting applications that require different levels of security and internet accessibility, while maintaining the ability to manage all resources.

Pro Tip: Use AWS Systems Manager Session Manager as an alternative to bastion hosts for connecting to private instances without the need to manage SSH keys or open inbound SSH ports in your security groups.

End-to-End Domain Configuration

- Registering and configuring a domain name in Route 53

- Setting up DNS records to route traffic to your AWS resources in the ap-south-1 region

- Implementing HTTPS with AWS Certificate Manager

- Creating custom domain endpoints for multi-port applications

This section guides you through the complete process of configuring a custom domain for your AWS applications, from registration to secure certificate implementation.

graph TD

User([Internet User]) -->|"1. DNS Query

example.com"| DNS[DNS Resolvers]

DNS -->|"2. Get NS Records"| Root[Root DNS Servers]

Root -->|"3. NS Records"| DNS

DNS -->|"4. Query NS Server"| R53[Route 53 Name Servers]

subgraph "AWS Cloud"

R53 -->|"5. A/ALIAS Record"| DNS

subgraph "Domain Configuration"

HZ[Hosted Zone

example.com]

Records["DNS Records:

- A record (www → IP)

- ALIAS (apex → ALB)

- MX (mail servers)

- TXT (verification)"]

Cert[SSL/TLS Certificate

ACM]

end

ALB[Application Load Balancer] -->|Handle HTTPS| Web[Web Servers]

API[API Gateway] --> Lambda[Lambda Functions]

CF[CloudFront] --> S3[S3 Static Content]

end

DNS -->|"6. Response with IP"| User

User -->|"7. HTTPS Request"| ALB

User -->|"7. HTTPS Request"| API

User -->|"7. HTTPS Request"| CF

HZ --- Records

Records --> ALB

Records --> API

Records --> CF

Cert --> ALB

Cert --> API

Cert --> CF

classDef external fill:#ECEFF1,stroke:#607D8B,color:black;

classDef route53 fill:#8ED1FC,stroke:#0693E3,color:black;

classDef dns fill:#ABE9CD,stroke:#00D084,color:black;

classDef endpoints fill:#FF9900,stroke:#E65100,color:white;

classDef backends fill:#F78DA7,stroke:#CF2E2E,color:white;

class User,DNS,Root external;

class R53,HZ,Records route53;

class Cert dns;

class ALB,API,CF endpoints;

class Web,Lambda,S3 backends;

Key Concepts

| Concept | Description |

|---|---|

| Record Sets | DNS records including A, CNAME, MX, TXT, etc. |

| AWS Certificate Manager (ACM) | Service for provisioning and managing SSL/TLS certificates |

| Alias Record | Special Route 53 record that routes traffic to AWS resources |

| Health Check | Route 53 feature to monitor endpoint health and route traffic accordingly |

Task 1: Register and Configure a Domain

Steps:

-

Register a Domain with Route 53:

- Sign in to the AWS Management Console and navigate to Route 53 service

- In the Route 53 dashboard, click on “Domains” in the left navigation panel

- Click on “Registered domains” and then click the “Register Domain” button

- In the domain registration page:

- Enter your desired domain name in the search box (e.g., yourdomain.com)

- Select the domain extension you want (.com, .net, .org, etc.)

- Click “Check” to verify availability

- If your desired domain is available, click “Add to cart”

- If it’s not available, you’ll see alternative suggestions - select one or try a different name

- Review the selected domain and pricing information

- Click “Continue” to proceed

- Complete the contact information form:

- Enter accurate contact details (first name, last name, organization, address, etc.)

- This information becomes publicly available in the WHOIS database unless you add privacy protection

- Select “Yes” for “Privacy Protection” (usually included free with AWS domains)

- Review the Terms and Conditions, check the agreement box

- Click “Continue” to proceed to payment

- Review your order details and cost (domains typically range from $9-$15 per year)

- Click “Complete Order” to finalize your purchase

- You’ll see a confirmation page with your order details

- Important: Domain registration typically takes 24-48 hours to complete

- You’ll receive an email confirmation when your domain is successfully registered

- You can check the status anytime on the “Registered domains” page in Route 53

-

Create a Hosted Zone:

- After your domain registration is complete (or if using an external registrar):

- In the Route 53 dashboard, click on “Hosted zones” in the left navigation panel

- Click the “Create hosted zone” button

- Enter your domain name (e.g., yourdomain.com) in the “Domain name” field

- Add a description (optional but recommended) like “Main hosting zone for yourdomain.com”

- For “Type”, select “Public hosted zone” (this makes your DNS records accessible from the internet)

- You can leave the Tags section empty or add tags if you have an organizational tagging strategy

- Click “Create hosted zone” button at the bottom

- After creation, you’ll see the zone details page with automatically created NS (Name Server) and SOA records

- The NS record contains four name servers that AWS has assigned to your domain

-

Configure Name Servers:

- If you registered your domain with Route 53, the name servers are automatically configured

- If you’re using an external domain registrar (like GoDaddy, Namecheap, etc.), you need to update their name

servers:

- In the Route 53 console, click on your newly created hosted zone

- Look for the “NS” (Name Server) record in the records list

- Note down all four name server addresses (they look like ns-1234.awsdns-56.org)

- Log in to your external domain registrar’s website

- Navigate to the domain management or DNS settings section

- Find the option to edit name servers or DNS settings

- Replace the current name servers with the four Route 53 name servers you noted

- Save your changes

- Important: DNS propagation can take up to 48 hours, though it often completes within a few hours

- You can verify propagation using online DNS lookup tools by checking if the name servers have been updated

- During propagation, DNS resolution may be inconsistent as global DNS servers update their records

Task 2: Create SSL/TLS Certificate

Steps:

-

Request Certificate:

- Sign in to the AWS Management Console and ensure you’re in the ap-south-1 region

- Check the region in the top-right corner of the console

- If needed, click on the region name and select “Asia Pacific (Mumbai) ap-south-1” from the dropdown

- Important: Certificates used with CloudFront must be in us-east-1 region, but for ALB in ap-south-1, request the certificate in that region

- Search for “Certificate Manager” in the search bar and select the ACM service

- In the AWS Certificate Manager dashboard, click the “Request a certificate” button

- On the “Request a certificate” page, select “Request a public certificate” and click “Next”

- In the “Domain names” section:

- In the first field, enter your domain name (e.g.,

yourdomain.com) - Click “Add another name to this certificate”

- In the second field, enter a wildcard domain (e.g.,

*.yourdomain.com) - This wildcard covers all subdomains (www, api, mail, etc.)

- In the first field, enter your domain name (e.g.,

- Under “Validation method”, select “DNS validation”

- DNS validation is recommended as it allows automatic renewal

- Email validation requires manual action every renewal period

- Under “Key algorithm”, leave the default “RSA 2048” unless you have specific requirements

- Add tags if desired (optional)

- Click “Request” to submit your certificate request

- You’ll be redirected to the certificates list where your new certificate will show “Pending validation” status

- Sign in to the AWS Management Console and ensure you’re in the ap-south-1 region

-

Validate Certificate:

- On the certificates page, click on your pending certificate to view its details

- In the certificate details page, you’ll see a “Domains” section listing both your domain entries

- Next to each domain name, you’ll see “Create records in Route 53” buttons

- For each domain in the list:

- Click the “Create records in Route 53” button

- A dialog will appear showing the CNAME record that will be created

- Click “Create records” to automatically add the validation records to your Route 53 hosted zone

- Repeat for each domain name listed

- You’ll see success messages confirming the creation of each record

- After creating the validation records:

- Certificate validation typically takes 5-30 minutes to complete

- You don’t need to take any further action during validation

- You can monitor the certificate status on the ACM console

- Refresh the page periodically to check progress

- Wait for the certificate status to change from “Pending validation” to “Issued”

- Once the status changes to “Issued”, your certificate is ready to use

- Important certificate details to note:

- The certificate ARN (Amazon Resource Name) - you’ll need this when configuring HTTPS for your load balancer

- The expiration date - AWS will automatically renew the certificate if the DNS validation records remain in place

- The “Domain” section showing all secured domains

- AWS ACM certificates are valid for 13 months and auto-renew as long as the DNS validation records exist

Task 3: Set Up DNS Records for Your Resources

Steps:

-

Create Record for Web Application ALB:

- Sign in to the AWS Management Console and navigate to the Route 53 service

- In the Route 53 dashboard, click on “Hosted zones” in the left navigation panel

- Click on your domain name from the list of hosted zones

- On your domain’s hosted zone page, click the “Create record” button

- For the basic record configuration:

- In the “Record name” field:

- For a subdomain, enter

www(this will create www.yourdomain.com) - For the apex/root domain, leave this field empty (this will create yourdomain.com)

- For a subdomain, enter

- Record type: Select “A - Routes traffic to an IPv4 address and some AWS resources”

- In the “Record name” field:

- In the “Route traffic to” section:

- Choose “Alias to Application and Classic Load Balancer”

- Select your region from the dropdown (e.g., ap-south-1)

- In the load balancer dropdown, select your ALB (e.g., web-application-lb)

- The dropdown will show the full DNS name of your ALB

- Note: The dropdown only appears if your ALB is in the same region you’re currently working in

- For “Routing policy” select “Simple routing” (routes traffic to a single resource)

- Leave “Evaluate target health” checked (this ensures DNS failover if your ALB becomes unhealthy)

- Set TTL (Time To Live) to “60 seconds” (this controls how long DNS resolvers cache this record)

- Click “Create records” to save your changes

- You’ll see a success message and the record will appear in your hosted zone’s record list

- The record will be available almost immediately but may take up to the TTL value to propagate globally

-

Create Record for API Endpoint:

- While still in your domain’s hosted zone, click the “Create record” button again

- For the basic record configuration:

- Record name: Enter

api(this will create api.yourdomain.com) - Record type: Select “A - Routes traffic to an IPv4 address and some AWS resources”

- Record name: Enter

- In the “Route traffic to” section:

- Select “Alias”

- From the endpoint type dropdown, select the appropriate service:

- For API Gateway: Select “Alias to API Gateway API”

- For a separate ALB: Select “Alias to Application and Classic Load Balancer”

- For an EC2 instance with Elastic IP: Select “Alias to Elastic IP address”

- Select your region from the dropdown

- Select the specific resource from the dropdown that appears

- If using API Gateway, ensure you’ve deployed the API to a stage first

- If using a separate ALB for your API, select that load balancer

- Keep routing policy as “Simple routing”

- Keep “Evaluate target health” checked

- Click “Create records” to save your changes

- Verify the record appears in your hosted zone’s record list

- Note down the full domain (api.yourdomain.com) for use in your applications or documentation

-

Set Up Email Records (Optional):

- While still in your domain’s hosted zone, click the “Create record” button again

- For MX (Mail Exchanger) records:

- Record name: Leave empty (for domain-wide email)

- Record type: Select “MX - Routes to mail servers”

- Set a TTL value (usually 3600 seconds/1 hour is appropriate)

- Value/Route traffic to: Enter your mail server information with priority values

- For example, if using Google Workspace (formerly G Suite):

1 ASPMX.L.GOOGLE.COM.5 ALT1.ASPMX.L.GOOGLE.COM.5 ALT2.ASPMX.L.GOOGLE.COM.10 ALT3.ASPMX.L.GOOGLE.COM.10 ALT4.ASPMX.L.GOOGLE.COM.- Note the periods at the end of each domain and the priority numbers at the beginning

- Click “Create records”

- For SPF records (to prevent email spoofing):

- Click “Create record” again

- Record name: Leave empty (for domain-wide policy)

- Record type: Select “TXT - Contains text information”

- TTL: 3600 seconds (1 hour)

- Value: Enter your SPF record, for example:

"v=spf1 include:_spf.google.com ~all"(for Google Workspace)

- Click “Create records”

- For DKIM records (if your email provider gave you DKIM keys):

- Click “Create record”

- Record name: Enter the selector provided by your email service (e.g.,

google._domainkey) - Record type: Select “TXT”

- TTL: 3600 seconds

- Value: Enter the DKIM key provided by your email service (in quotes)

- Click “Create records”

- For DMARC record:

- Click “Create record”

- Record name: Enter

_dmarc - Record type: Select “TXT”

- TTL: 3600 seconds

- Value: Enter your DMARC policy, for example:

"v=DMARC1; p=none; rua=mailto:[email protected]"

- Click “Create records”

- Verify all records appear correctly in your hosted zone

- Test email setup using online email verification tools

Task 4: Configure HTTPS for Your Application

Steps:

-

Update Load Balancer:

- Sign in to the AWS Management Console and navigate to the EC2 service

- In the left navigation panel, under “Load Balancing”, click on “Load Balancers”

- Select your Application Load Balancer from the list (e.g., web-application-lb)

- In the bottom section of the page, click on the “Listeners” tab

- Click the “Add listener” button to create a new HTTPS listener

- Configure the new listener:

- Protocol: Select “HTTPS”

- Port: Enter “443” (standard HTTPS port)

- Under “Default actions”:

- Action type: Select “Forward to target groups”

- Target group: Select your web application target group from the dropdown

- Under “Secure listener settings”:

- Security policy: Select the latest policy available (e.g., “ELBSecurityPolicy-2016-08” or newer)

- The security policy controls the SSL/TLS protocols and ciphers allowed

- For “Default SSL/TLS certificate”:

- Select “From ACM (recommended)”

- Choose your domain’s certificate from the dropdown (the one you created and validated earlier)

- If you don’t see your certificate, verify it’s in the same region as your ALB and fully validated

- Click “Add” to create the HTTPS listener

- The listener creation process takes a few seconds to complete

- When finished, you should see both HTTP (port 80) and HTTPS (port 443) listeners in the list

-

Redirect HTTP to HTTPS:

- In the same Listeners tab, find the HTTP:80 listener in the list

- Click the “Edit” button or select “Edit” from the “Actions” dropdown

- Under “Actions”, click on the existing Forward action and click “Remove” to delete it

- Click “Add action”, then select “Redirect” from the dropdown

- Configure the redirect:

- Protocol: Select “HTTPS” from the dropdown (this changes HTTP to HTTPS)

- Port: Enter “443” (standard HTTPS port)

- Path: Leave as “#{path}” (this maintains the same URL path structure)

- Query: Leave as “#{query}” (this maintains any query parameters)

- Status code: Select “HTTP_301 (Permanent Redirect)”

- 301 is best for production as browsers will cache the redirect